Spotted on the arXiv Physics blog: what about using the SKA and the Moon as a collector? That would certainly qualify as a sensor the size of a planet:

Lunar detection of ultra-high-energy cosmic rays and neutrinos by J. D. Bray, J. Alvarez-Muñiz, S. Buitink, R. D. Dagkesamanskii, R. D. Ekers, H. Falcke, K. G. Gayley, T. Huege, C. W. James, M. Mevius, R. L. Mutel, R. J. Protheroe, O. Scholten, R. E. Spencer, S. ter Veen

The origin of the most energetic particles in nature, the ultra-high-energy (UHE) cosmic rays, is still a mystery. Due to their extremely low flux, even the 3,000 km² Pierre Auger detector registers only about 30 cosmic rays per year with sufficiently high energy to be used for directional studies. A method to provide a vast increase in collecting area is to use the lunar technique, in which ground-based radio telescopes search for the nanosecond radio flashes produced when a cosmic ray interacts with the Moon's surface. The technique is also sensitive to the associated flux of UHE neutrinos, which are expected from cosmic ray interactions during production and propagation, and the detection of which can also be used to identify the UHE cosmic ray source(s). An additional flux of UHE neutrinos may also be produced in the decays of topological defects from the early Universe.
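As a back-of-the-envelope check on the collecting-area argument, the geometric area of one lunar hemisphere, 2πR² with a mean lunar radius R ≈ 1737.4 km, can be compared with the 3,000 km² quoted for Pierre Auger. This is a sketch under loud assumptions: it counts the whole Earth-facing hemisphere, ignores libration, and says nothing about detection efficiency. The effective aperture of the lunar technique also depends on how much of the radio emission escapes the surface toward Earth and on the telescope's detection threshold, so the ratio below is a geometric comparison, not a prediction of the gain in event rate. The helper name `hemisphere_area_km2` is hypothetical.

```python
import math

MOON_RADIUS_KM = 1737.4   # mean lunar radius
AUGER_AREA_KM2 = 3000.0   # Pierre Auger detector area quoted in the abstract


def hemisphere_area_km2(radius_km=MOON_RADIUS_KM):
    """Geometric area of one hemisphere, 2*pi*R^2 (no libration, no efficiency)."""
    return 2.0 * math.pi * radius_km ** 2


area = hemisphere_area_km2()
ratio = area / AUGER_AREA_KM2  # purely geometric, not an event-rate gain
print(f"Earth-facing lunar hemisphere: {area:.3g} km^2")
print(f"Ratio to Auger's 3,000 km^2: about {ratio:,.0f}x")
```

This gives roughly 1.9 × 10⁷ km², in line with the abstract's "millions of square km", and a geometric ratio of about 6,300.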

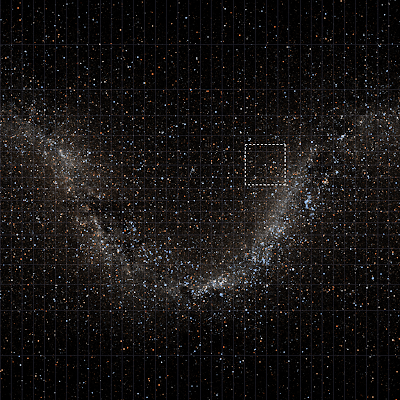

Observations with existing radio telescopes have shown that this technique is technically feasible, and established the required procedure: the radio signal should be searched for pulses in real time, compensating for ionospheric dispersion and filtering out local radio interference, and candidate events stored for later analysis. For the SKA, this requires the formation of multiple tied-array beams, with high time resolution, covering the Moon, with either SKA-LOW or SKA-MID. With its large collecting area and broad bandwidth, the SKA will be able to detect the known flux of UHE cosmic rays using the visible lunar surface (millions of square km) as the detector, providing sufficient detections of these extremely rare particles to solve the mystery of their origin.
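The real-time procedure described above (compensate for ionospheric dispersion, search for pulses, keep candidates) can be illustrated with a minimal Python sketch. It assumes the standard cold-plasma group delay Δt = 40.3 · STEC / (c · ν²), with STEC the slant total electron content in electrons per m² and ν in Hz. It uses a simplified incoherent (channel-shift) dedispersion on synthetic data. The 110 to 200 MHz band, 5 ns sampling, 10 TECU electron content, 6-sigma threshold and all function names (`iono_delay_s`, `channel_shift`, `dedisperse`, `find_pulses`, `demo`) are hypothetical choices for illustration. They are not parameters of the SKA design or of the authors' pipeline. Real lunar-pulse searches may instead dedisperse coherently on the raw voltages, and interference filtering is not modeled here.

```python
import random
import statistics

C = 299_792_458.0   # speed of light in vacuum, m/s
K_IONO = 40.3       # ionospheric group-delay constant e^2/(8 pi^2 eps0 m_e), m^3/s^2
TECU = 1e16         # one TEC unit, electrons per m^2


def iono_delay_s(freq_hz, stec_tecu):
    """Extra group delay (s) at freq_hz through a slant TEC of stec_tecu TEC units."""
    return K_IONO * stec_tecu * TECU / (C * freq_hz ** 2)


def channel_shift(freq_hz, f_ref_hz, stec_tecu, dt_s):
    """Delay of a channel relative to the reference (highest) frequency, in whole samples."""
    delta = iono_delay_s(freq_hz, stec_tecu) - iono_delay_s(f_ref_hz, stec_tecu)
    return round(delta / dt_s)


def dedisperse(channels, freqs_hz, stec_tecu, dt_s):
    """Incoherent dedispersion: advance each channel by its delay, then sum the channels."""
    f_ref = max(freqs_hz)
    n = len(channels[0])
    out = [0.0] * n
    for samples, f in zip(channels, freqs_hz):
        shift = channel_shift(f, f_ref, stec_tecu, dt_s)  # lower frequencies arrive later
        for i in range(n - shift):
            out[i] += samples[i + shift]
    return out


def find_pulses(series, n_sigma=6.0):
    """Indices of samples exceeding mean + n_sigma * (population) standard deviation."""
    mu = statistics.fmean(series)
    sigma = statistics.pstdev(series)
    return [i for i, x in enumerate(series) if x > mu + n_sigma * sigma]


def demo(seed=1, stec_tecu=10.0, dt_s=5e-9, n=400, t0=100, amp=3.0):
    """Inject one dispersed pulse into Gaussian noise (hypothetical setup) and recover it."""
    rng = random.Random(seed)
    freqs = [110e6 + 6e6 * k for k in range(16)]  # 16 channels, 110 to 200 MHz
    f_ref = max(freqs)
    channels = []
    for f in freqs:
        samples = [rng.gauss(0.0, 1.0) for _ in range(n)]
        samples[t0 + channel_shift(f, f_ref, stec_tecu, dt_s)] += amp  # dispersed arrival
        channels.append(samples)
    # Candidate indices; a real system would buffer these for later offline analysis.
    return find_pulses(dedisperse(channels, freqs, stec_tecu, dt_s))
```

Calling `demo()` injects one pulse at sample 100 of the reference channel. After the per-channel shifts, the 16 contributions line up into a peak about 12 times the per-sample noise level of the summed series. Without the shifts, the same pulse would be smeared over about 155 samples (roughly 0.78 µs at 10 TECU), far longer than the nanosecond flash, and would be lost in the noise.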